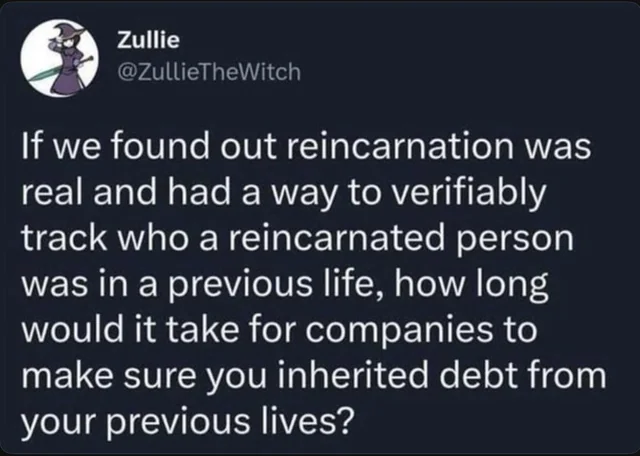

The post above inspired this story.

Reincarnation: Inheritance

It was always said your debts died with you.

However, that was before they discovered reincarnation was real.

At first, it was a miracle. Scientists unveiled the SoulPrint protocol, quantum mapping that could trace the consciousness of a person across lifetimes. Proof, they said, that the soul persisted.

Proof of justice, of continuity, of something greater than death.

For a brief moment, humanity believed it might finally mean something.

Then the corporations stepped in.

If you could inherit wealth, they argued, then you could inherit debt.

They called it karmic enforcement.

Now, not even in death could you escape capitalist greed.

For people like Vale, that greed didn’t stop at the grave.

It was stitched into every contract, every scan, every form signed in a moment of desperation.

She had once dreamed of designing things that made life better: low-cost sensors, water filters for field kits, clean power retrofits for housing blocks. But none of it paid. Not fast enough.

Now she picked up whatever freelance work she could get: repair jobs, obsolete code patches, things other people didn’t want to touch.

Because surviving came before dreams.

Then came the letter.

By the time Vale received it, the debt had already been accruing interest for over a century.

A paper envelope. Not a netmsg, real paper, an expensive rarity.

Embossed with a silver seal: Credit Continuity Division.

Inside, the summary was short:

You have been identified via certified quantum continuity as the reincarnation of one Elias B. Trenholm, digital ID #TRN-1975-NYC.

As such, you are now legally liable for the outstanding balance attached to this identity, per the Soul Liability Enforcement Act (2031).

Total Due: $1,823,566.72

Minimum Due: $45,000 by 06/01/2040

The second page showed a grayscale photo of a man in his thirties. His eyes were hollow, his expression blank. Born 1975. Died 2009. Systems engineer. Two failed tech startups (2001, 2007). Six-figure student debt from a shuttered for-profit college. Subprime mortgage default in 2008. Bankruptcy filed months before death.

His financial ruin had been meticulously preserved by NovaCredit Systems, Modia Federal Loan Servicing, and LexinTrust Analytics, all backers and lobbyists for the Soul Liability Enforcement Act.

At first, Vale laughed. Soul ID?

But the document bore a federal trace code from the Bureau of Reincarnative Affairs.

She checked. It matched.

Ten years ago, SoulPrint had been opt-in. Then the loopholes closed.

Now it was automatic, assigned at birth, embedded in hospital records, linked to biometric scans.

Her last emergency room visit must’ve triggered the match. She’d signed intake forms without thinking.

You could challenge a match. But the legal fees were higher than the debt. That was the point.

She didn’t remember being Elias. That didn’t matter.

At the Bureau office, the clerk didn’t even look up. Just scanned her ID band and printed the form.

“You should feel lucky,” the woman said. “Some people come back with ten debts across five centuries. At least you only had one life before.”

“That we know of,” Vale muttered.

The clerk slid a pamphlet across the desk.

Reparation Through Identity: Fulfilling Karmic Responsibility the Ethical Way.

“You have until the end of the month. If you can’t pay, the system defaults to Neural Offset.”

Vale blinked. “You mean you take memories.”

“Only non-essential ones. Redundancies. Nostalgia, dream patterns, sensory imprints. The process is non-invasive and fully compliant with consciousness retention regulations.”

“And if I refuse?”

The clerk looked up for the first time. “Then the debt rolls forward to your next incarnation. With penalties.”

Vale already knew the pitch. She’d seen the ads: clean clinics, soft music, testimonials from smiling people who couldn’t remember what they were so worried about.

But there were darker threads in the forums: screenshots, whistleblower leaks, buried research studies.

The memories they took (smells, regrets, first kisses, final goodbyes) were stripped, tagged, and fed into commercial neurogrids. Used to train empathy simulators, targeted ad engines, interrogation AIs.

Neural Offset wasn’t just debt relief. It was asset extraction.

Vale sold everything she could. Maxed freelance shifts. Cut her meals in half.

But there was no making the kind of money she needed before the deadline.

Eventually, she stopped looking at her bank balance and started looking for answers instead.

She started learning about Elias.

At first, she was just looking for a loophole, anything in his record that might reduce the debt or delay the offset. But the more she read, the worse it felt. He’d lived in a time before SoulPrints, before karmic enforcement, before any of this was possible. He couldn’t have known.

And yet she kept wondering: if he had, would he have lived differently?

Will anyone now, knowing what we know?

She kept reading. Elias’s patents read like sketches of the future: modular energy grids, urban vertical farms, autonomous repair drones. Most were filed and forgotten. Others were quietly bought, shelved, or rebranded. He was never credited for any of them.

The rest of his record told a common story: high-interest student loans, lawsuits from creditors, a foreclosure in November 2008. Not scandal. Just failure in a system designed to let people fall through the cracks.

His obituary had labeled him:

Elias Trenholm – A dreamer who didn’t quit.

Vale stared at the line for a long time. It wasn’t fair, but it was familiar—dreaming, trying, failing, being forgotten. She didn’t remember his life, but now she was the one paying for it.

On the final day, she sat in the NeuroConvert clinic, cold metal pressed to her temples.

“You’ll forget some things,” the tech said. “Maybe a childhood smell. A favorite street. A name you only said once. Nothing essential.”

Vale nodded.

Then she asked, “Will I remember any of this?”

The tech didn’t answer.

The machine hummed.

She closed her eyes.

Three days later, a netmsg arrived:

NOTICE: Payment Received in Full.

Neural Offset Process Complete.

Debt Resolved.

Friendly reminder: Additional debts from undocumented lives may still be identified pending future audits.

She stared at the screen. A hollow space stirred behind her eyes.

Whatever had been there was gone. But something else remained.

Then, without knowing why, she opened a new document and typed:

A dreamer who didn’t quit.